Apache Airflow Task SDK

The Apache Airflow Task SDK provides Python-native interfaces for defining Dags, executing tasks in isolated subprocesses, and interacting with Airflow resources (e.g., Connections, Variables, XComs, Metrics, Logs, and OpenLineage events) at runtime. It also includes the core execution-time components that manage communication between the worker and the Airflow scheduler/backend.

The goal of the Task SDK is to decouple Dag authoring from Airflow internals (Scheduler, API Server, etc.), providing a forward-compatible, stable interface for writing and maintaining Dags across Airflow versions. This approach reduces boilerplate and keeps your Dag definitions concise and readable.

1. Introduction and Getting Started

Below is a quick introduction and installation guide to get started with the Task SDK.

Installation

To install the Task SDK, run:

pip install apache-airflow-task-sdk

Getting Started

Define a basic Dag and task in just a few lines of Python:

/opt/airflow/airflow-core/src/airflow/example_dags/example_simplest_dag.py

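from airflow.sdk import dag, task


@dag
def example_simplest_dag():
    @task
    def my_task():
        pass

    my_task()  # calling the task inside the Dag function adds it to the Dag


example_simplest_dag()  # instantiate the Dag so Airflow can discover it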

2. Public Interface

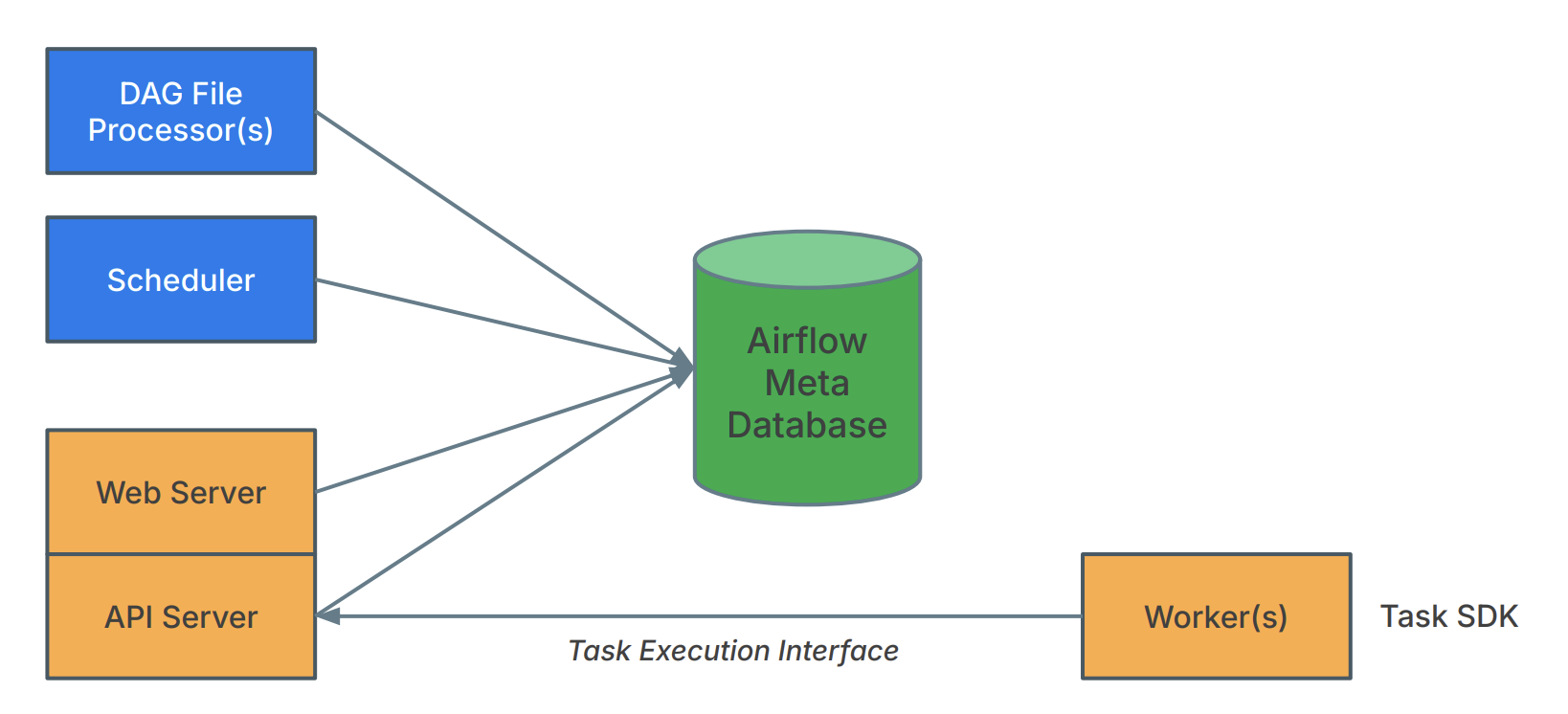

Direct metadata database access from task code is now restricted. A dedicated Task Execution API handles all runtime interactions (state transitions, heartbeats, XComs, and resource fetching), ensuring isolation and security.

Airflow now supports a service-oriented architecture, enabling tasks to be executed remotely via a new Task Execution API. This API decouples task execution from the scheduler and introduces a stable contract for running tasks outside of Airflow’s traditional runtime environment.

To support remote execution, Airflow provides the Task SDK, a lightweight runtime environment for running Airflow tasks in external systems such as containers, edge environments, or other runtimes. This lays the groundwork for language-agnostic task execution and brings improved isolation, portability, and extensibility to Airflow-based workflows.
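The request-based interaction described above can be pictured with a small toy model. This is a conceptual sketch only, not Airflow's implementation: the class ToyExecutionAPI, the function run_task, and the request shapes are all hypothetical, and the real Task Execution API is a network service rather than an in-process object. The point it illustrates is that task code sends a limited set of requests and never touches stored state directly.

```python
"""Toy model of API-mediated task execution (not Airflow's implementation)."""
from dataclasses import dataclass, field


@dataclass
class ToyExecutionAPI:
    """Hypothetical stand-in for the server side: the only holder of state."""

    variables: dict = field(default_factory=dict)
    xcoms: dict = field(default_factory=dict)
    states: dict = field(default_factory=dict)

    def handle(self, request: dict) -> dict:
        """Serve one request; anything outside the known contract is rejected."""
        kind = request["type"]
        if kind == "set_state":
            self.states[request["task_id"]] = request["state"]
            return {"ok": True}
        if kind == "get_variable":
            return {"ok": True, "value": self.variables.get(request["key"])}
        if kind == "push_xcom":
            self.xcoms[(request["task_id"], request["key"])] = request["value"]
            return {"ok": True}
        return {"ok": False, "error": f"unsupported request: {kind}"}


def run_task(api: ToyExecutionAPI, task_id: str) -> None:
    """Task-side code: it only sends requests, never reads storage directly."""
    api.handle({"type": "set_state", "task_id": task_id, "state": "running"})
    greeting = api.handle({"type": "get_variable", "key": "greeting"})["value"]
    api.handle(
        {
            "type": "push_xcom",
            "task_id": task_id,
            "key": "return_value",
            "value": f"{greeting}, world",
        }
    )
    api.handle({"type": "set_state", "task_id": task_id, "state": "success"})


api = ToyExecutionAPI(variables={"greeting": "hello"})
run_task(api, "say_hello")
print(api.states, api.xcoms)
```

Because every interaction goes through handle, the set of operations available to task code is explicit, which is what allows the task to run in a separate process, container, or runtime.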

Airflow 3.0 also introduces a new airflow.sdk namespace that exposes the core authoring interfaces for defining Dags and tasks. Dag authors should import objects such as DAG, dag(), and task() from airflow.sdk rather than from internal modules. This namespace provides a stable, forward-compatible interface for Dag authoring across future versions of Airflow.
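When moving existing Dag files to the new namespace, a small script can help locate imports that still point at older module paths. The sketch below uses only the standard library; the helper name find_legacy_imports is hypothetical, and the mapping is a small illustrative subset of older import locations, not an exhaustive or official list.

```python
import ast

# Illustrative subset of older import locations; not an exhaustive or official mapping.
LEGACY_TO_SDK = {
    ("airflow.decorators", "dag"): "dag",
    ("airflow.decorators", "task"): "task",
    ("airflow.models", "DAG"): "DAG",
    ("airflow.models.dag", "DAG"): "DAG",
}


def find_legacy_imports(source: str) -> list:
    """Return sorted (line number, suggested import) pairs for known legacy imports."""
    suggestions = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.ImportFrom):
            continue
        for alias in node.names:
            sdk_name = LEGACY_TO_SDK.get((node.module, alias.name))
            if sdk_name is not None:
                suggestions.append((node.lineno, f"from airflow.sdk import {sdk_name}"))
    return sorted(suggestions)


example = """\
from airflow.decorators import dag, task
from airflow.models import DAG
from airflow.sdk import Variable
"""

for lineno, suggestion in find_legacy_imports(example):
    print(f"line {lineno}: consider `{suggestion}`")
```

The script only reports candidates and leaves the files unchanged, so each suggestion can be reviewed before editing.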

4. Example Dag References

Explore a variety of Dag examples and patterns in the Examples page.

5. Concepts

Discover the fundamental concepts that Dag authors need to understand when working with the Task SDK, including Airflow 2.x vs 3.x architectural differences, database access restrictions, and task lifecycle. For full details, see the Concepts page.

Airflow 2.x Architecture

Architectural Decoupling: Task Execution Interface (Airflow 3.x)

6. API References

For the full public API reference, see the airflow.sdk API Reference page.